Stacking (stacked generalization) is an ensemble technique that trains a meta-model on the predictions of several diverse base models and uses that meta-model to make the final prediction.

In this example, we demonstrate how to create a stacking ensemble that uses XGBoost as the level 1 model. XGBoost learns from the predictions of the base (level 0) models, Random Forest, Logistic Regression, and KNN, to make the final prediction.

By leveraging the strengths of different algorithms, stacking can potentially improve performance compared to using individual models.
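To make the mechanism concrete, here is a minimal hand-rolled sketch of what a stacking ensemble does, using only scikit-learn. It assumes a smaller synthetic dataset and, for brevity, a `LogisticRegression` meta-model; an `XGBClassifier` could be used as `meta_model` instead. The key step is `cross_val_predict`, which produces out-of-fold predictions, so the meta-model is trained on predictions the base models made on rows they had not been fitted on.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_predict, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

base_models = [
    RandomForestClassifier(n_estimators=50, random_state=0),
    LogisticRegression(max_iter=1000),
    KNeighborsClassifier(n_neighbors=5),
]

# Level 0, training side: out-of-fold positive-class probabilities (5-fold CV),
# one column per base model
meta_train = np.column_stack([
    cross_val_predict(m, X_train, y_train, cv=5, method="predict_proba")[:, 1]
    for m in base_models
])

# Level 0, test side: refit each base model on the full training set
meta_test = np.column_stack([
    m.fit(X_train, y_train).predict_proba(X_test)[:, 1] for m in base_models
])

# Level 1: the meta-model learns how to combine the base predictions
meta_model = LogisticRegression()
meta_model.fit(meta_train, y_train)
y_pred = meta_model.predict(meta_test)
acc = accuracy_score(y_test, y_pred)
print(f"manual stacking accuracy: {acc:.3f}")
```

Using out-of-fold predictions is the important design choice: if the meta-model were trained on in-sample base predictions, it would learn to trust overfit models such as the random forest more than it should.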

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score
from xgboost import XGBClassifier
import matplotlib.pyplot as plt

# Generate a synthetic binary classification dataset and hold out 20% for testing
X, y = make_classification(n_samples=1000, n_classes=2, n_features=20, n_informative=10, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Define the base (level 0) models
rf = RandomForestClassifier(n_estimators=100, random_state=42)
lr = LogisticRegression(max_iter=1000)  # higher iteration cap reduces the chance of convergence warnings
knn = KNeighborsClassifier(n_neighbors=5)
base_models = [('rf', rf), ('lr', lr), ('knn', knn)]

# Define the stacking ensemble with XGBoost as the level 1 (meta) model
xgb = XGBClassifier(n_estimators=100, learning_rate=0.01, random_state=42)
stacking_ensemble = StackingClassifier(estimators=base_models, final_estimator=xgb)

# Train and evaluate the stacking ensemble
stacking_ensemble.fit(X_train, y_train)
y_pred_stacking = stacking_ensemble.predict(X_test)
accuracy_stacking = accuracy_score(y_test, y_pred_stacking)

# Train and evaluate each base model on its own.
# StackingClassifier fits clones of the base models, so rf, lr and knn are still unfitted here.
rf.fit(X_train, y_train)
accuracy_rf = accuracy_score(y_test, rf.predict(X_test))

lr.fit(X_train, y_train)
accuracy_lr = accuracy_score(y_test, lr.predict(X_test))

knn.fit(X_train, y_train)
accuracy_knn = accuracy_score(y_test, knn.predict(X_test))

# Visualize the performance comparison
models = ['Random Forest', 'Logistic Regression', 'KNN', 'Stacking Ensemble']
accuracies = [accuracy_rf, accuracy_lr, accuracy_knn, accuracy_stacking]

plt.figure(figsize=(8, 6))
plt.bar(models, accuracies)
plt.title('Model Performance Comparison')
plt.xlabel('Model')
plt.ylabel('Accuracy')
# Derive the lower y-limit from the data so that no bar is clipped
plt.ylim(max(0.0, min(accuracies) - 0.05), 1.0)
plt.show()
```

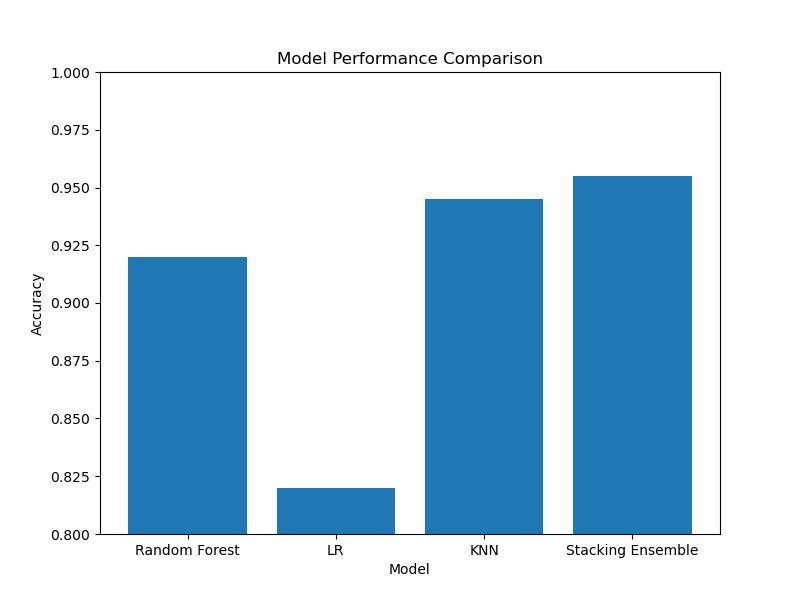

The plot may look like the following:

In this example, we generate a synthetic binary classification dataset using scikit-learn’s make_classification function and split it into train and test sets.

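We define three base models: Random Forest, Logistic Regression, and KNN. We then create a stacking ensemble with scikit-learn's `StackingClassifier`, passing the base models as `estimators` and XGBoost as the level 1 model (`final_estimator`). By default, `StackingClassifier` trains the final estimator on out-of-fold predictions from 5-fold cross-validation, so the meta-model never learns from predictions a base model made on its own training rows; the base models are then refit on the full training set for prediction.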
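To see what the level 1 model actually receives, `StackingClassifier.transform` returns the base-model outputs that are fed to the final estimator. The sketch below spells out the relevant parameters (`cv`, `stack_method`, `passthrough`) and, to stay scikit-learn only, assumes a `LogisticRegression` final estimator in place of `XGBClassifier`; the shape of the meta-features is the same either way.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=300, n_features=20, n_informative=10, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
        ("lr", LogisticRegression(max_iter=1000)),
        ("knn", KNeighborsClassifier(n_neighbors=5)),
    ],
    final_estimator=LogisticRegression(),  # stand-in for XGBClassifier in this sketch
    cv=5,                                  # folds used to build out-of-fold meta-features
    stack_method="predict_proba",          # feed probabilities rather than hard labels
    passthrough=False,                     # True would also pass the raw features through
)
stack.fit(X, y)

# Level 0 outputs from the refit base models for five rows
meta_features = stack.transform(X[:5])
print(meta_features.shape)
print(stack.stack_method_)
```

For a binary task, scikit-learn keeps only the positive-class probability from each base model, so with three base models the final estimator sees three columns.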

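We train the stacking ensemble on the training data and evaluate its performance on the test set using accuracy as the metric. We also train and evaluate each individual model for comparison. Because the test set holds only 200 samples, a difference of one or two correct predictions between models (0.005 accuracy each) should not be over-interpreted.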
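A single train/test split yields one accuracy number per model, and that number can shift with a different `random_state`. One hedged way to check whether stacking really helps is k-fold cross-validation over all models. The sketch assumes a smaller dataset, fewer trees, and a `LogisticRegression` final estimator in place of XGBoost to keep the run short. Because `StackingClassifier` runs its own internal cross-validation inside each outer fold, this is effectively nested cross-validation and costs noticeably more fits than evaluating a single model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=10, random_state=0)

base_models = [
    ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
    ("lr", LogisticRegression(max_iter=1000)),
    ("knn", KNeighborsClassifier(n_neighbors=5)),
]

# cross_val_score clones each estimator, so sharing instances here is safe
models = dict(base_models)
models["stacking"] = StackingClassifier(estimators=base_models, final_estimator=LogisticRegression())

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    results[name] = (scores.mean(), scores.std())
    print(f"{name:>9}: {scores.mean():.3f} ± {scores.std():.3f}")
```

If the stacking mean lies within about one standard deviation of the best base model, the evidence that stacking helps on this data is weak.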

Finally, we visualize the performance comparison using a bar plot, which shows the accuracies of the individual models and the stacking ensemble.
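A fixed lower y-limit can clip bars when a model scores below it, and close accuracies are hard to read off a bar chart. The sketch below is one possible refinement: a hypothetical helper, `plot_accuracies`, that labels each bar with its value and derives the y-axis lower bound from the data. It assumes Matplotlib 3.4 or newer (for `Axes.bar_label`) and uses the non-interactive `Agg` backend so it also runs without a display; the accuracy values in the usage line are placeholders, not results.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line to use plt.show() interactively
import matplotlib.pyplot as plt


def plot_accuracies(names, accuracies, margin=0.05):
    """Hypothetical helper: bar chart of accuracies with value labels."""
    fig, ax = plt.subplots(figsize=(8, 6))
    bars = ax.bar(names, accuracies)
    ax.bar_label(bars, fmt="%.3f")  # write each accuracy above its bar
    ax.set_title("Model Performance Comparison")
    ax.set_xlabel("Model")
    ax.set_ylabel("Accuracy")
    # Start the axis just below the worst model so no bar is clipped
    ax.set_ylim(max(0.0, min(accuracies) - margin), 1.0)
    return fig, ax


# Placeholder values for illustration only, not measured results
fig, ax = plot_accuracies(
    ["Random Forest", "Logistic Regression", "KNN", "Stacking Ensemble"],
    [0.90, 0.85, 0.88, 0.91],
)
```

In the main example, passing `models` and `accuracies` to this helper would replace the `plt.bar` block; `fig.savefig(...)` can write the chart to a file.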

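Using XGBoost as the level 1 model lets the ensemble learn a non-linear combination of the base models' predicted probabilities, so it can rely on each base model to a different degree depending on how trustworthy its predictions are. Whether this beats the best individual model depends on the data: stacking tends to help most when the base models make different kinds of errors, and on an easy synthetic dataset like this one the gain may be small or absent.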
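One way to check how the level 1 model combines the base models is to inspect the fitted final estimator. With XGBoost, `stacking_ensemble.final_estimator_.feature_importances_` gives one importance per meta-feature column. The scikit-learn-only sketch below instead assumes a `LogisticRegression` final estimator, whose coefficients can be read directly as per-base-model weights on the log-odds scale.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=10, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=50, random_state=0)),
        ("lr", LogisticRegression(max_iter=1000)),
        ("knn", KNeighborsClassifier(n_neighbors=5)),
    ],
    final_estimator=LogisticRegression(),  # stand-in for XGBClassifier in this sketch
).fit(X, y)

# Binary task: one meta-feature (positive-class probability) per base model,
# so the final estimator has exactly one coefficient per base model.
names = [name for name, _ in stack.estimators]
weights = dict(zip(names, stack.final_estimator_.coef_.ravel()))
for name, w in weights.items():
    print(f"{name}: {w:+.3f}")
```

A larger positive weight means the meta-model leans more on that base model's probability; base models whose predictions are strongly correlated may share weight in ways that are hard to interpret individually.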