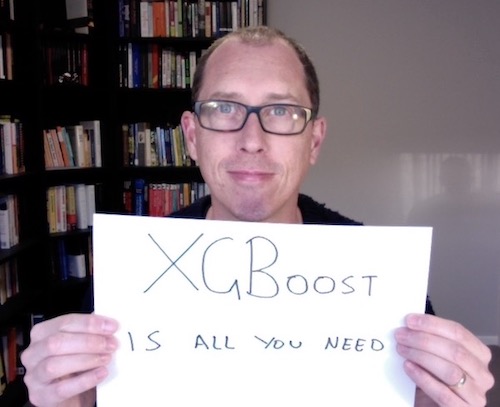

Hi, I’m Jason Brownlee, Ph.D.

Welcome to XGBoosting!

Contact me any time, or let’s connect on LinkedIn and Twitter.

Follow @XGBoostAwesome to get XGBoost tips and tutorials.

XGBoost is Amazing

Extreme Gradient Boosting, or XGBoost for short, is a fast implementation of the Gradient Boosting Machine or GBM algorithm.

It is the library that put GBM on the map.

Before XGBoost, Random Forest was the king of tabular data (i.e. data as it looks in a table, spreadsheet, or CSV file).

No longer.

There’s a reason why “XGBoost is all you need” is a meme.

XGBoost typically gives the best predictions for a wide range of classification and regression problems with tabular data, and even related problems like complex time series forecasting.

The problem is that getting the best results from XGBoost often requires good model configuration and good knowledge of the API.

Using XGBoost Can Be Challenging

XGBoost is not a fire-and-forget algorithm like Random Forest.

It is a complicated algorithm and effective usage requires both knowledge about what is happening internally and knowledge of the parameters we can use to change the way it works.

There’s lots to know about, not least:

- Predictive modeling on tabular data

- Decision trees for predictive modeling

- Ensembles of decision trees, like bagging and random forest.

- Boosting methods for ensembles.

- Gradient Boosting Machine’s approach to boosting.

- Extreme Gradient Boosting’s hyperparameters.

- XGBoost API functions, properties, and parameters.
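To make the boosting idea in this list a little more concrete, here is a minimal sketch of gradient boosting for regression in plain Python. It is an illustration only, not how XGBoost is implemented: it assumes squared error loss, a single input feature, and depth-1 trees (stumps), and all function names are made up for this example. XGBoost itself adds much more, such as second-order gradient information and regularization.

```python
# Toy gradient boosting for regression (squared error, one feature, stumps).
# Illustration only; the function names here are hypothetical.

def fit_stump(xs, residuals):
    """Find the single split that best fits the residuals (least squares)."""
    best = None
    # Exclude the largest value so the right side of the split is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def fit_gbm(xs, ys, n_rounds=100, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate

def predict_gbm(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

def mse(model, xs, ys):
    return sum((y - predict_gbm(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)

# Small synthetic dataset: y = x^2 on a grid of points.
xs = [i / 10 for i in range(40)]
ys = [x * x for x in xs]

baseline = fit_gbm(xs, ys, n_rounds=0)   # predicts the mean only
model = fit_gbm(xs, ys, n_rounds=100)    # mean plus 100 boosted stumps

mse_baseline = mse(baseline, xs, ys)
mse_boosted = mse(model, xs, ys)
print(f"Mean-only MSE: {mse_baseline:.4f}")
print(f"Boosted MSE:   {mse_boosted:.4f}")
```

Each round fits a small tree to what the current ensemble still gets wrong, then adds a scaled-down copy of it to the ensemble. The learning rate and the number of rounds trade off against each other, which is one reason configuration matters so much.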

The XGBoost API documentation is a good start, but is terse and sparse on worked examples.

Knowing what the API provides alone is not good enough.

Knowing the math in the XGBoost paper alone is not enough.

You need to know how to use the API to configure the model for specific datasets or to achieve specific effects.

Thankfully, we can get good results quickly by using recipes.

Welcome to XGBoosting!

This website provides a large number of examples to help you get results with XGBoost, super fast.

The focus is on Python examples over other languages like R or Java, because Python appears to be the preferred language for data science and machine learning.

Each example provides:

- A complete and working code example that you can copy-and-paste into your project immediately.

- An explanation to give you a feeling for what you need to know about the API and underlying model.

There are a ton of recipes on the site and I’m adding more all the time.

- You can browse examples by category, like model training, model parameter configuration, and model evaluation.

- You can also search for examples by keyword.

Once you find an example you need, you can copy the code directly into your project and read the explanation about how it works and how you can modify it for the specifics of your predictive modeling problem.

There is intentionally a lot of redundancy between the recipes, meaning the same technique may be explained from a few different perspectives. This is helpful as you can approach a complex topic from many different angles.

It’s also a lot of fun, jumping from example to example and learning about the insides and APIs for XGBoost.

Suggest More Examples

XGBoosting provides a TON of examples, but not everything is covered yet.

I’m updating examples and adding new examples all the time.

If you have ideas for examples to add, make a suggestion.

Definition of “XGBoosting”

“XGBoosting” is a portmanteau of “xgboost”, the algorithm and code library, and the suffix “-ing”, denoting a verbal action.

We are “XGBoost-ing” or “XGBoosting” when we are using or applying the xgboost library or algorithm.

I may have invented this definition to name this website. Nevertheless, I note that some people on the wild web refer to XGBoost as XGBoosting, perhaps because XGBoost is a contraction of “Extreme Gradient Boosting”. I also like this reading. XGBoost could just as easily have been named “XGBoosting” (and AdaBoost named “AdaBoosting”) to focus on the verb sense rather than the noun sense of an ensemble that uses the boosting procedure.

The phrase “XGBoosting” does not appear in the official paper, GitHub repository, or API documentation, at least according to a quick search.

Why?

Why did I create this site?

Because, like you, I copy-and-paste code to get started on new projects.

The problem is, the examples out on the wild web mostly suck:

- They’re wrong wrong wrong.

- They use an old version of the XGBoost or sklearn API.

- They don’t execute.

- They require obscure third-party libraries or web services.

- etc.

I wanted: 1) a very large corpus of consistent XGBoost examples, 2) that are correct, 3) that use the modern API, and most importantly, 4) that have a complete and standalone executable version of the code that I can copy-and-paste into my project to get started.

I created the corpus for myself, then, upon urging from some friends, decided to share it via this website.

This is how XGBoosting came to be.

I hope you find something useful. Let me know if you do. It will mean the world!

Jason Brownlee.