XGBoost allows you to monitor multiple evaluation metrics during training, providing a more comprehensive view of your model’s performance.

This example demonstrates how to configure XGBoost to use multiple eval_metric values and retrieve the results using the scikit-learn API.

By specifying a list of evaluation metrics, you can track different aspects of your model’s performance simultaneously. This is particularly useful when you want to balance multiple objectives or gain insights into various performance characteristics.

Here’s an example that showcases the configuration and usage of multiple evaluation metrics in XGBoost:

    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from xgboost import XGBClassifier
    import matplotlib.pyplot as plt

    # Generate a synthetic multi-class classification dataset
    X, y = make_classification(n_samples=1000, n_classes=3, n_informative=5, random_state=42)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Configure XGBoost with multiple evaluation metrics (scikit-learn API)
    eval_metrics = ['merror', 'mlogloss', 'auc']
    clf_sklearn = XGBClassifier(n_estimators=100, eval_metric=eval_metrics, random_state=42)

    # The metrics are computed on every set passed in eval_set after each boosting round
    clf_sklearn.fit(X_train, y_train, eval_set=[(X_test, y_test)])

    # Retrieve evaluation metric results
    results_sklearn = clf_sklearn.evals_result()

    # Plot the evaluation metric results, one line per metric
    fig, ax1 = plt.subplots(1, 1, figsize=(12, 4))
    for metric in eval_metrics:
        ax1.plot(results_sklearn['validation_0'][metric], label=metric)
    ax1.set_xlabel('Iteration')
    ax1.set_ylabel('Metric Value')
    ax1.set_title('Scikit-learn API')
    ax1.legend()
    plt.tight_layout()
    plt.show()

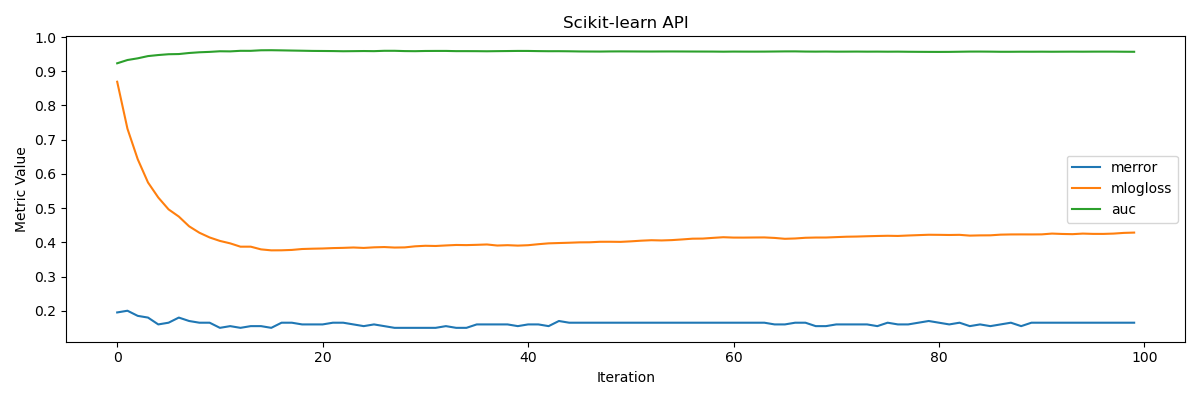

The generated plot may look like the following:

In this example, we generate a synthetic three-class classification dataset using make_classification from scikit-learn. We then configure XGBoost with a list of evaluation metrics ('merror', 'mlogloss', and 'auc') using the scikit-learn API.

We pass the list of metrics to the eval_metric parameter of XGBClassifier.

After training the model, we retrieve the evaluation metric results using evals_result() for the scikit-learn API.

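Finally, we plot the progress of each evaluation metric across the boosting iterations. Note that the three metrics are on different scales, so they share an axis here only for a quick visual comparison.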

By configuring XGBoost to use multiple evaluation metrics, you can gain a more comprehensive understanding of your model’s performance and make informed decisions based on the metrics that matter most for your specific problem.